Last week I attended a workshop on running highly parallel distributed jobs on the Open Science Grid (OSG). There I met Derek Weitzel, who has made an excellent contribution to advancing R as a high-performance computing language by developing BoscoR. BoscoR greatly facilitates the use of the existing package “GridR” by letting the R user manage job submission through Bosco.

It seems that no matter how many queue-submission systems I become familiar with (Torque, SGE, Condor), the cluster I am currently working on uses something foreign, and I have to relearn how to write a job submission file. The first major selling point of Bosco is that it lets the user write one job submission file locally (based on HTCondor) and use it to submit jobs to various remote clusters, each with a different interface. The second is that Bosco manages work sharing if you have access to more than one cluster: it submits jobs to each cluster in proportion to how unburdened that cluster is, which is great if you have access to, say, three clusters. The user’s apply jobs get through the queue as quickly as possible because the work is distributed over all available clusters.

Hopefully that has convinced you that Bosco is worth having, so let’s proceed with how to use it. I will illustrate the process using Duke University’s cluster, the DSCR. There are three steps: 1) Installing Bosco 2) Installing GridR 3) Running a test job.

Installing Bosco

First, go ahead and download Bosco; the sign-up is only there so the developers can get an idea of how many people are using it. Detailed install instructions can be found here, but I will also go through the steps.

[lindon@laptop Downloads]$ tar xvzf ./bosco_quickstart.tar.gz
[lindon@laptop Downloads]$ ./bosco_quickstart

The executable will then ask some questions:

Do you want to install Bosco? Select y/n and press [ENTER]: y

Type the cluster name and press [ENTER]: dscr-login-01.oit.duke.edu

Type your name at dscr-login-01.oit.duke.edu (default YOUR_USER) and press [ENTER]: NetID

Type the queue manager for dscr-login-01.oit.duke.edu (pbs, condor, lsf, sge, slurm) and press [ENTER]: sge

NetID@dscr-login-01.oit.duke.edu’s password: XXXXXXX

For Duke users, the hostname of the DSCR is dscr-login-01.oit.duke.edu. You log in with your NetID, and the queue submission system is the Sun Grid Engine, so type sge. If you already have SSH keys set up, then I think the password prompt gets skipped. That takes care of the installation. You can now try submitting to the remote cluster from your laptop. Download this test executable and this submission file, then start Bosco and try submitting a job.

[msl33@hotel ~/tutorial-bosco]$ source ~/bosco/bosco_setenv
[msl33@hotel ~/tutorial-bosco]$ bosco_start
BOSCO Started
[msl33@hotel ~/tutorial-bosco]$ condor_submit bosco01.sub
Submitting job(s).
1 job(s) submitted to cluster 70.
[msl33@hotel ~/tutorial-bosco]$ condor_q
-- Submitter: hotel.stat.duke.edu : <127.0.0.1:11000?sock=21707_cbb6_3> : hotel.stat.duke.edu
ID      OWNER     SUBMITTED     RUN_TIME    ST  PRI  SIZE  CMD
70.0    msl33     8/31 12:08    0+00:00:00  I   0    0.0   short.sh
1 jobs; 0 completed, 0 removed, 1 idle, 0 running, 0 held, 0 suspended

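This is the output you should see if all has worked well. Note that you need to start Bosco with the first two commands above (source ~/bosco/bosco_setenv, then bosco_start) before submitting anything.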

Installing GridR

The version of GridR currently on CRAN is an older one that doesn’t support job submission through Bosco. It will once CRAN gets the latest version of GridR, but until then you need to install GridR from source, so download it here and install it:

install.packages("~/Downloads/GridR_0.9.7.tar.gz", repos=NULL, type="source")

Running a Parallel Apply on the Cluster

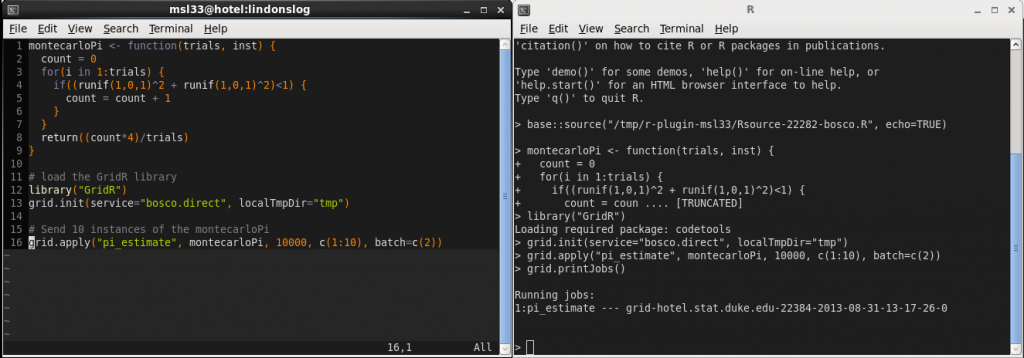

Consider a toy example which approximates pi by Monte Carlo.

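montecarloPi <- function(trials, inst) {
  # inst labels the instance; it is not used in the calculation
  count <- 0
  for (i in 1:trials) {
    # draw a point uniformly in the unit square and check whether it falls inside the quarter circle
    if ((runif(1, 0, 1)^2 + runif(1, 0, 1)^2) < 1) {
      count <- count + 1
    }
  }
  # the fraction of hits estimates pi/4
  return((count * 4) / trials)
}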
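Before sending anything to the cluster, it can be worth checking the estimator locally on a modest number of trials. The sketch below is only an illustration: montecarloPiVec is a hypothetical vectorised variant of the function above (it is not part of GridR), and the plain lapply call stands in for the remote apply, assuming each instance receives the same number of trials plus an instance index.

```r
# Hypothetical vectorised variant of the toy estimator (not part of GridR).
# It draws all points at once instead of looping over trials.
montecarloPiVec <- function(trials, inst) {
  x <- runif(trials)
  y <- runif(trials)
  # proportion of points inside the quarter circle, scaled to estimate pi
  4 * mean(x^2 + y^2 < 1)
}

set.seed(1)  # only to make the local check repeatable

# Local stand-in for the remote apply: four instances, 100000 trials each
local_estimates <- lapply(1:4, function(inst) montecarloPiVec(100000, inst))

# Pool the instances into a single estimate
pooled <- mean(unlist(local_estimates))
print(pooled)
```

If the pooled value is close to pi, the function itself is behaving, and any later trouble is more likely to lie in the submission setup than in the R code.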

One can now use grid.apply from the GridR package, combined with Bosco, to submit jobs to the remote cluster from within the user’s local R session.

# load the GridR library
library("GridR")
grid.init(service="bosco.direct", localTmpDir="tmp")

# Send 10 instances of the montecarloPi
grid.apply("pi_estimate", montecarloPi, 10000000, c(1:10), batch=c(2))

You can then see how your jobs are getting on with the “grid.printJobs()” command.

When it completes, “pi_estimate” will be a list object with 10 elements containing approximations to pi. Obviously, there is an overhead in submitting jobs and also a lag while these jobs get through the queue. One must balance this overhead against the computational time required to complete a single iteration of the apply function, since Bosco will create and submit a job for every iteration. If each iteration is quick but there are a great many of them, one could consider blocking these operations into, say, 10 jobs, so that the queue lag and submission overhead are negligible compared to the time taken to complete no_apply_iterations/10 computations. This also avoids creating a large number of jobs on the cluster, which might aggravate other users.

One can also add clusters to Bosco using the “bosco_cluster --add” command, so that jobs are submitted to whichever cluster has the most free cores available. All in all, this is a great aid for those doing computationally intensive tasks and makes parallel work-sharing very easy indeed.
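The idea of blocking many cheap iterations into a handful of jobs can be sketched in plain R. Everything here is illustrative: run_block and cheap_task are hypothetical names, and lapply is used as a local stand-in for the remote apply, so the example runs without a cluster. On a real setup one would presumably hand the blocks to grid.apply instead, but I have not shown that because it depends on how your GridR installation expects its arguments.

```r
# Hypothetical helper: carry out one block of apply iterations inside a single job
run_block <- function(f, idx) {
  lapply(idx, f)
}

n_iter <- 1000  # total number of cheap iterations
n_jobs <- 10    # number of jobs we are willing to put in the queue

# Assign each iteration index to one of n_jobs contiguous blocks
block_id <- ceiling(seq_len(n_iter) * n_jobs / n_iter)
blocks <- split(seq_len(n_iter), block_id)

# Stand-in for a computation that is quick for a single iteration
cheap_task <- function(i) i^2

# Local stand-in for the cluster submission: one call per block, not per iteration
block_results <- lapply(blocks, function(idx) run_block(cheap_task, idx))

# Flatten back to one result per original iteration, in the original order
results <- unlist(block_results, use.names = FALSE)
```

With this layout the queue sees 10 jobs of 100 iterations each rather than 1000 tiny jobs, so the submission overhead is paid 10 times instead of 1000.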